如何下载及使用通义千问7B开源大模型

发布时间:2024年06月06日

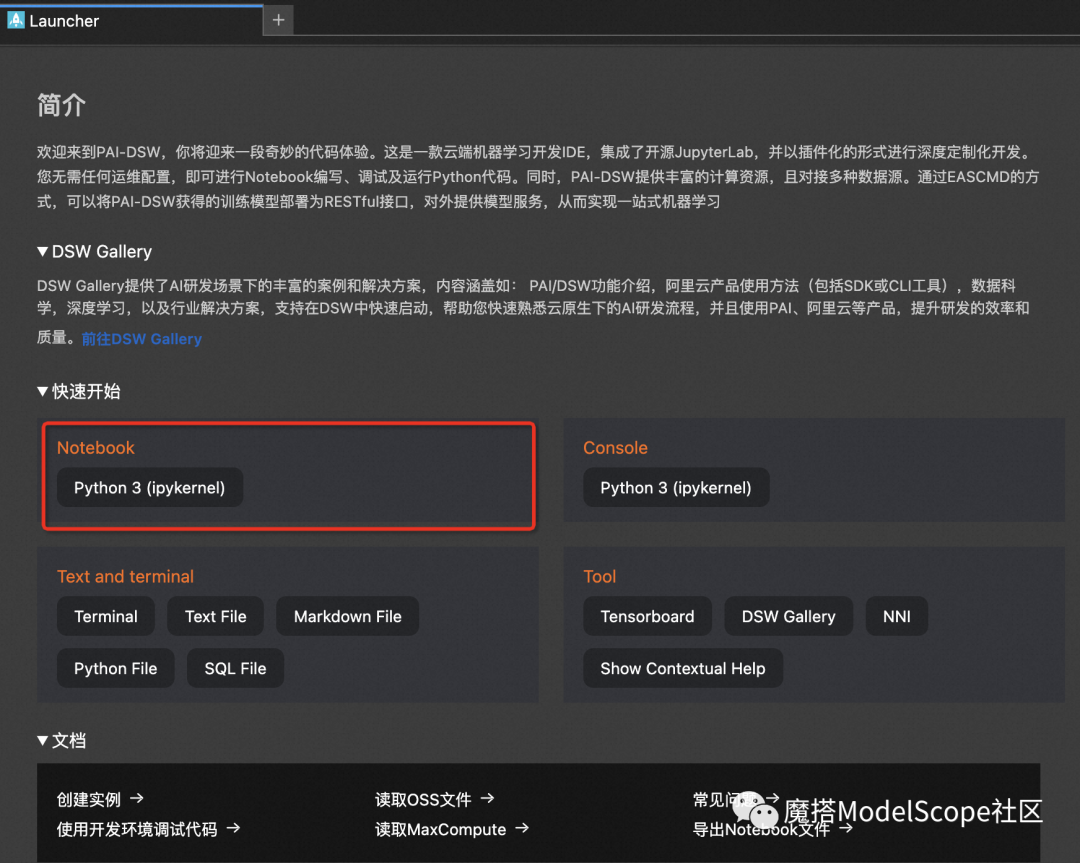

环境配置与安装

模型链接及下载

from modelscope.hub.snapshot_download import snapshot_download

model_dir = snapshot_download('Qwen/Qwen-7b-chat', 'v1.0.0')

def get_model_tokenizer_Qwen(model_dir: str,

torch_dtype: Dtype,

load_model: bool = True):

config = read_config(model_dir)

logger.info(config)

model_config = QwenConfig.from_pretrained(model_dir)

model_config.torch_dtype = torch_dtype

logger.info(model_config)

tokenizer = QwenTokenizer.from_pretrained(model_dir)

model = None

if load_model:

model = Model.from_pretrained(

model_dir,

cfg_dict=config,

config=model_config,

device_map='auto',

torch_dtype=torch_dtype)

return model, tokenizer

get_model_tokenizer_Qwen(model_dir, torch.bfloat16)

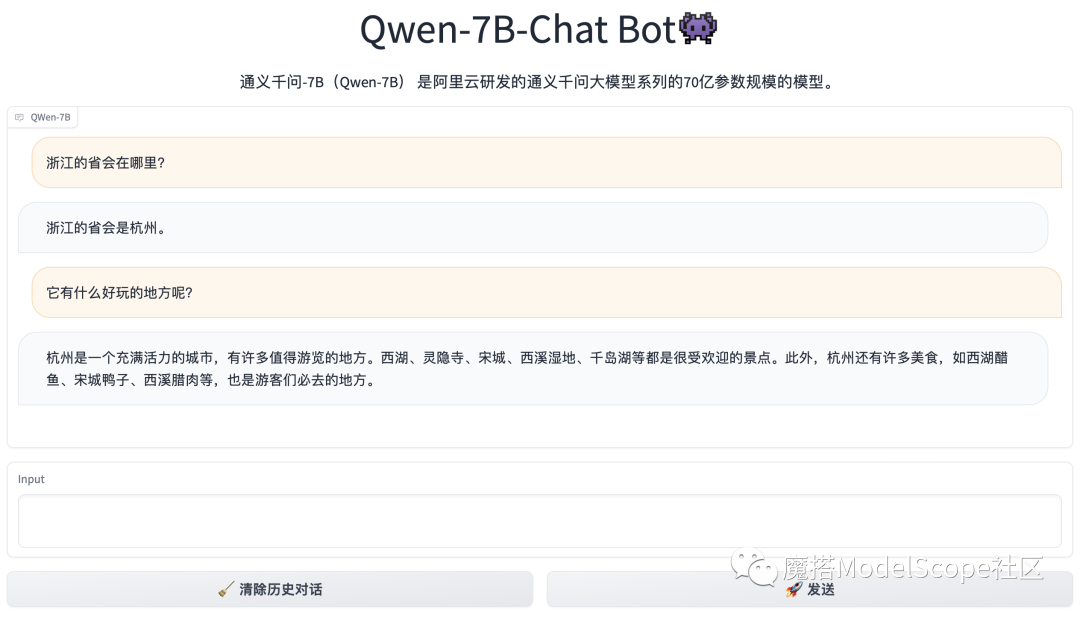

创空间体验

模型推理

import os

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

from modelscope.pipelines import pipeline

from modelscope.utils.constant import Tasks

model_id = 'Qwen/Qwen-7b-chat'

pipe = pipeline(

task=Tasks.chat, model=model_id, device_map='auto')

history = None

system = 'You are a helpful assistant.'

text = '浙江的省会在哪里?'

results = pipe(text, history=history, system=system)

response, history = results['response'], results['history']

print(f'Response: {response}')

text = '它有什么好玩的地方呢?'

results = pipe(text, history=history, system=system)

response, history = results['response'], results['history']

print(f'Response: {response}')

"""

Response: 浙江的省会是杭州。

Response: 杭州是一座历史悠久、文化底蕴深厚的城市,拥有许多著名景点,如西湖、西溪湿地、

灵隐寺、千岛湖等,其中西湖是杭州最著名的景点,被誉为“天下第一湖”。此外,杭州还有许多古迹、

文化街区、美食和艺术空间等,值得一去。

"""

import os

os.environ['CUDA_VISIBLE_DEVICES'] = '0'

from modelscope.pipelines import pipeline

from modelscope.utils.constant import Tasks

model_id = 'Qwen/Qwen-7b'

pipeline_ins = pipeline(

task=Tasks.text_generation, model=model_id, device_map='auto')

text = '蒙古国的首都是乌兰巴托(Ulaanbaatar)\n冰岛的首都是雷克雅未克(Reykjavik)\n埃塞俄比亚的首都是'

result = pipeline_ins(text)

print(result['text'])

SFT数据集链接和下载

from modelscope import MsDataset

finance_en = MsDataset.load(

'wyj123456/finance_en', split='train').to_hf_dataset()

print(len(finance_en["instruction"]))

print(finance_en[0])

"""Out

68912

{'instruction': 'For a car, what scams can be plotted with 0% financing vs rebate?',

'input': None, 'output': "The car deal makes money 3 ways. If you pay in one lump payment.

If the payment is greater than what they paid for the car, plus their expenses,

they make a profit. They loan you the money. You make payments over months or years,

if the total amount you pay is greater than what they paid for the car, plus their expenses,

plus their finance expenses they make money. Of course the money takes years to come in,

or they sell your loan to another business to get the money faster but in a smaller amount.

You trade in a car and they sell it at a profit. Of course that new transaction could be a

lump sum or a loan on the used car... They or course make money if you bring the car back

for maintenance, or you buy lots of expensive dealer options. Some dealers wave two deals

in front of you: get a 0% interest loan. These tend to be shorter 12 months vs 36,48,60

or even 72 months. The shorter length makes it harder for many to afford. If you can't

swing the 12 large payments they offer you at x% loan for y years that keeps the payments

in your budget. pay cash and get a rebate. If you take the rebate you can't get the 0% loan.

If you take the 0% loan you can't get the rebate. The price you negotiate minus the rebate

is enough to make a profit. The key is not letting them know which offer you are interested in.

Don't even mention a trade in until the price of the new car has been finalized.

Otherwise they will adjust the price, rebate, interest rate, length of loan,

and trade-in value to maximize their profit. The suggestion of running the numbers through a

spreadsheet is a good one. If you get a loan for 2% from your bank/credit union for 3 years

and the rebate from the dealer, it will cost less in total than the 0% loan from the dealer.

The key is to get the loan approved by the bank/credit union before meeting with the dealer.

The money from the bank looks like cash to the dealer.", 'text': None}

"""

模型训练最佳实践

# 获取示例代码

git clone https://github.com/modelscope/swift.git

cd swift/examples/pytorch/llm

# sft

bash run_sft.sh

具体的代码部分:

导入相关的库

# note: utils can be found ata

# `https://github.com/modelscope/swift/tree/main/examples/pytorch/llm/utils`

# it is recommended that you git clone the swift repo

import os

from dataclasses import dataclass, field

from functools import partial

from types import MethodType

from typing import List, Optional

import torch

from torch import Tensor

from utils import (DATASET_MAPPING, DEFAULT_PROMPT, MODEL_MAPPING,

get_dataset, get_model_tokenizer, plot_images,

process_dataset, select_dtype)

from swift import (HubStrategy, Seq2SeqTrainer,

Seq2SeqTrainingArguments,

get_logger)

from swift.utils import (add_version_to_work_dir, parse_args,

print_model_info, seed_everything,

show_freeze_layers)

from swift.utils.llm_utils import (data_collate_fn, print_example,

stat_dataset, tokenize_function)

logger = get_logger()

命令行参数定义

@dataclass

class SftArguments:

model_type: str = field(

default='Qwen-7b',

metadata={'choices': list(MODEL_MAPPING.keys())})

sft_type: str = field(

default='lora', metadata={'choices': ['lora', 'full']})

output_dir: Optional[str] = None

seed: int = 42

resume_from_ckpt: Optional[str] = None

dtype: Optional[str] = field(

default=None, metadata={'choices': {'bf16', 'fp16', 'fp32'}})

ignore_args_error: bool = False # True: notebook compatibility

dataset: str = field(

default='finance-en',

metadata={'help': f'dataset choices: {list(DATASET_MAPPING.keys())}'})

dataset_seed: int = 42

dataset_sample: Optional[int] = None

dataset_test_size: float = 0.01

prompt: str = DEFAULT_PROMPT

max_length: Optional[int] = 2048

lora_target_modules: Optional[List[str]] = None

lora_rank: int = 8

lora_alpha: int = 32

lora_dropout_p: float = 0.1

gradient_checkpoint: bool = True

batch_size: int = 1

num_train_epochs: int = 1

optim: str = 'adamw_torch'

learning_rate: Optional[float] = None

weight_decay: float = 0.01

gradient_accumulation_steps: int = 16

max_grad_norm: float = 1.

lr_scheduler_type: str = 'cosine'

warmup_ratio: float = 0.1

eval_steps: int = 50

save_steps: Optional[int] = None

save_total_limit: int = 2

logging_steps: int = 5

push_to_hub: bool = False

# 'user_name/repo_name' or 'repo_name'

hub_model_id: Optional[str] = None

hub_private_repo: bool = True

hub_strategy: HubStrategy = HubStrategy.EVERY_SAVE

# None: use env var `MODELSCOPE_API_TOKEN`

hub_token: Optional[str] = None

def __post_init__(self):

if self.sft_type == 'lora':

if self.learning_rate is None:

self.learning_rate = 1e-4

if self.save_steps is None:

self.save_steps = self.eval_steps

elif self.sft_type == 'full':

if self.learning_rate is None:

self.learning_rate = 1e-5

if self.save_steps is None:

# Saving the model takes a long time

self.save_steps = self.eval_steps * 4

else:

raise ValueError(f'sft_type: {self.sft_type}')

if self.output_dir is None:

self.output_dir = 'runs'

self.output_dir = os.path.join(self.output_dir, self.model_type)

if self.lora_target_modules is None:

self.lora_target_modules = MODEL_MAPPING[

self.model_type]['lora_TM']

self.torch_dtype, self.fp16, self.bf16 = select_dtype(

self.dtype, self.model_type)

if self.hub_model_id is None:

self.hub_model_id = f'{self.model_type}-sft'

导入模型

seed_everything(args.seed)

# ### Load Model and Tokenizer

model, tokenizer = get_model_tokenizer(args.model_type, torch_dtype=args.torch_dtype)

if args.gradient_checkpoint:

model.gradient_checkpointing_enable()

model.enable_input_require_grads()

准备LoRA

# ### Prepare lora

if args.sft_type == 'lora':

from swift import LoRAConfig, Swift

if args.resume_from_ckpt is None:

lora_config = LoRAConfig(

r=args.lora_rank,

target_modules=args.lora_target_modules,

lora_alpha=args.lora_alpha,

lora_dropout=args.lora_dropout_p)

logger.info(f'lora_config: {lora_config}')

model = Swift.prepare_model(model, lora_config)

else:

model = Swift.from_pretrained(

model, args.resume_from_ckpt, is_trainable=True)

show_freeze_layers(model)

print_model_info(model)

# check the device and dtype of the model

_p: Tensor = list(model.parameters())[-1]

logger.info(f'device: {_p.device}, dtype: {_p.dtype}')

导入数据集

# ### Load Dataset

dataset = get_dataset(args.dataset.split(','))

train_dataset, val_dataset = process_dataset(dataset,

args.dataset_test_size,

args.dataset_sample,

args.dataset_seed)

tokenize_func = partial(

tokenize_function,

tokenizer=tokenizer,

prompt=args.prompt,

max_length=args.max_length)

train_dataset = train_dataset.map(tokenize_func)

val_dataset = val_dataset.map(tokenize_func)

del dataset

# Data analysis

stat_dataset(train_dataset)

stat_dataset(val_dataset)

data_collator = partial(data_collate_fn, tokenizer=tokenizer)

print_example(train_dataset[0], tokenizer)

配置Config

# ### Setting trainer_args

output_dir = add_version_to_work_dir(args.output_dir)

trainer_args = Seq2SeqTrainingArguments(

output_dir=output_dir,

do_train=True,

do_eval=True,

evaluation_strategy='steps',

per_device_train_batch_size=args.batch_size,

per_device_eval_batch_size=args.batch_size,

gradient_accumulation_steps=args.gradient_accumulation_steps,

learning_rate=args.learning_rate,

weight_decay=args.weight_decay,

max_grad_norm=args.max_grad_norm,

num_train_epochs=args.num_train_epochs,

lr_scheduler_type=args.lr_scheduler_type,

warmup_ratio=args.warmup_ratio,

logging_steps=args.logging_steps,

save_strategy='steps',

save_steps=args.save_steps,

save_total_limit=args.save_total_limit,

bf16=args.bf16,

fp16=args.fp16,

eval_steps=args.eval_steps,

dataloader_num_workers=1,

load_best_model_at_end=True,

metric_for_best_model='loss',

greater_is_better=False,

sortish_sampler=True,

optim=args.optim,

hub_model_id=args.hub_model_id,

hub_private_repo=args.hub_private_repo,

hub_strategy=args.hub_strategy,

hub_token=args.hub_token,

push_to_hub=args.push_to_hub,

resume_from_checkpoint=args.resume_from_ckpt)

开启微调:

# ### Finetuning

trainer = Seq2SeqTrainer(

model=model,

args=trainer_args,

data_collator=data_collator,

train_dataset=train_dataset,

eval_dataset=val_dataset,

tokenizer=tokenizer,

)

trainer.train()

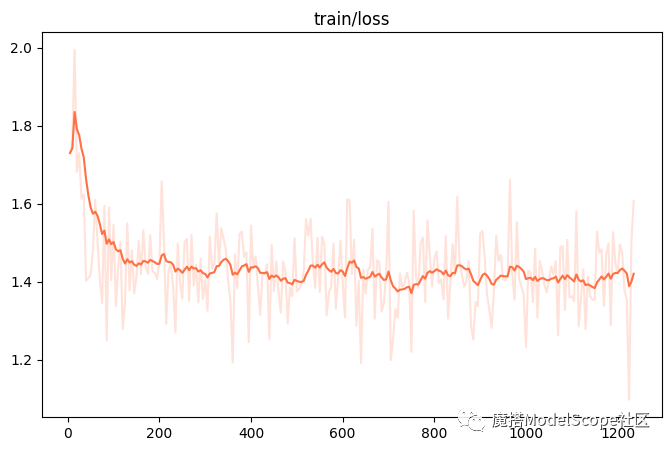

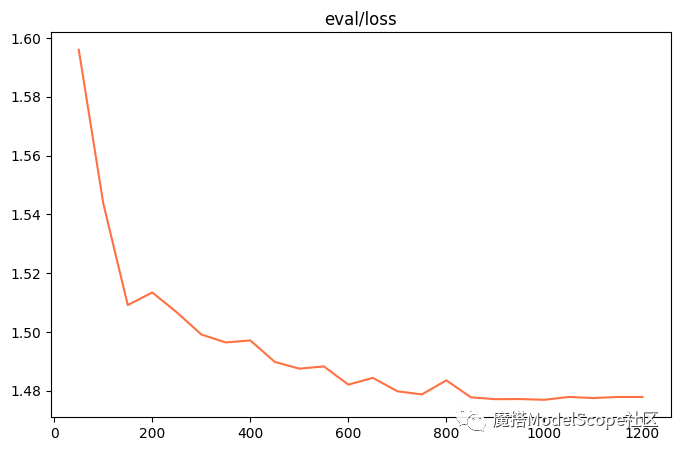

可视化:

# ### Visualization

images_dir = os.path.join(output_dir, 'images')

tb_dir = os.path.join(output_dir, 'runs')

folder_name = os.listdir(tb_dir)[0]

tb_dir = os.path.join(tb_dir, folder_name)

plot_images(images_dir, tb_dir, ['train/loss'], 0.9)

if args.push_to_hub:

trainer._add_patterns_to_gitignores(['images/'])

trainer.push_to_hub()

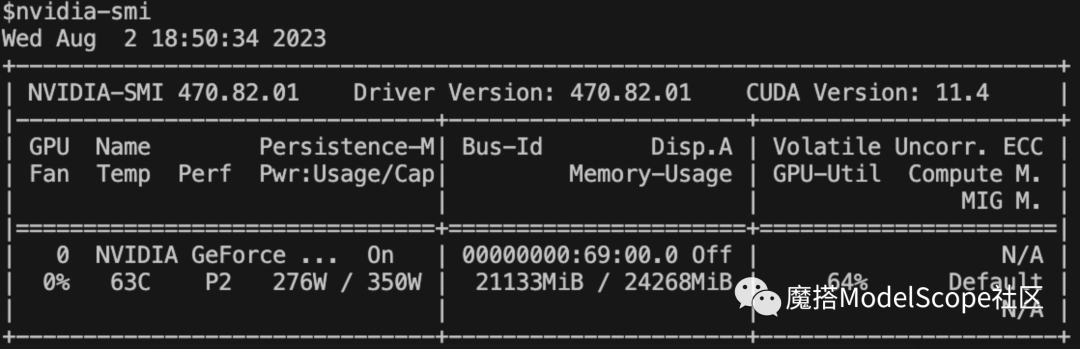

资源消耗

使用训练后的模型进行推理

运行infer脚本:

# infer

bash run_infer.sh

具体的代码部分:

# note: utils can be found ata

# `https://github.com/modelscope/swift/tree/main/examples/pytorch/llm/utils`

# it is recommended that you git clone the swift repo

# ### Setting up experimental environment.

import os

from dataclasses import dataclass, field

from functools import partial

from typing import List, Optional

import torch

from transformers import GenerationConfig, TextStreamer

from utils import (DATASET_MAPPER, DEFAULT_PROMPT, MODEL_MAPPER, get_dataset,

get_model_tokenizer, inference, parse_args, process_dataset,

tokenize_function)

from modelscope import get_logger

from modelscope.swift import LoRAConfig, Swift

logger = get_logger()

@dataclass

class InferArguments:

model_type: str = field(

default='Qwen-7b', metadata={'choices': list(MODEL_MAPPER.keys())})

sft_type: str = field(

default='lora', metadata={'choices': ['lora', 'full']})

ckpt_path: str = '/path/to/your/iter_xxx.pth'

eval_human: bool = False # False: eval test_dataset

ignore_args_error: bool = False # True: notebook compatibility

dataset: str = field(

default='finance_en',

metadata={'help': f'dataset choices: {list(DATASET_MAPPER.keys())}'})

dataset_seed: int = 42

dataset_sample: Optional[int] = None

dataset_test_size: float = 0.01

prompt: str = DEFAULT_PROMPT

max_length: Optional[int] = 2048

lora_target_modules: Optional[List[str]] = None

lora_rank: int = 8

lora_alpha: int = 32

lora_dropout_p: float = 0.1

max_new_tokens: int = 512

temperature: float = 0.9

top_k: int = 50

top_p: float = 0.9

def __post_init__(self):

if self.lora_target_modules is None:

self.lora_target_modules = MODEL_MAPPER[self.model_type]['lora_TM']

if not os.path.isfile(self.ckpt_path):

raise ValueError(

f'Please enter a valid ckpt_path: {self.ckpt_path}')

def llm_infer(args: InferArguments) -> None:

# ### Loading Model and Tokenizer

support_bf16 = torch.cuda.is_bf16_supported()

if not support_bf16:

logger.warning(f'support_bf16: {support_bf16}')

model, tokenizer, _ = get_model_tokenizer(

args.model_type, torch_dtype=torch.bfloat16)

# ### Preparing lora

if args.sft_type == 'lora':

lora_config = LoRAConfig(

replace_modules=args.lora_target_modules,

rank=args.lora_rank,

lora_alpha=args.lora_alpha,

lora_dropout=args.lora_dropout_p,

pretrained_weights=args.ckpt_path)

logger.info(f'lora_config: {lora_config}')

model = Swift.prepare_model(model, lora_config)

elif args.sft_type == 'full':

state_dict = torch.load(args.ckpt_path, map_location='cpu')

model.load_state_dict(state_dict)

else:

raise ValueError(f'args.sft_type: {args.sft_type}')

# ### Inference

tokenize_func = partial(

tokenize_function,

tokenizer=tokenizer,

prompt=args.prompt,

max_length=args.max_length)

streamer = TextStreamer(

tokenizer, skip_prompt=True, skip_special_tokens=True)

generation_config = GenerationConfig(

max_new_tokens=args.max_new_tokens,

temperature=args.temperature,

top_k=args.top_k,

top_p=args.top_p,

do_sample=True,

pad_token_id=tokenizer.eos_token_id)

logger.info(f'generation_config: {generation_config}')

if args.eval_human:

while True:

instruction = input('<<< ')

data = {'instruction': instruction}

input_ids = tokenize_func(data)['input_ids']

inference(input_ids, model, tokenizer, streamer, generation_config)

print('-' * 80)

else:

dataset = get_dataset(args.dataset.split(','))

_, test_dataset = process_dataset(dataset, args.dataset_test_size,

args.dataset_sample,

args.dataset_seed)

mini_test_dataset = test_dataset.select(range(10))

del dataset

for data in mini_test_dataset:

output = data['output']

data['output'] = None

input_ids = tokenize_func(data)['input_ids']

inference(input_ids, model, tokenizer, streamer, generation_config)

print()

print(f'[LABELS]{output}')

print('-' * 80)

# input('next[ENTER]')

if __name__ == '__main__':

args, remaining_argv = parse_args(InferArguments)

if len(remaining_argv) > 0:

if args.ignore_args_error:

logger.warning(f'remaining_argv: {remaining_argv}')

else:

raise ValueError(f'remaining_argv: {remaining_argv}')

llm_infer(args)

如果你想要了解关于智能工具类的内容,可以查看 智汇宝库,这是一个提供智能工具的网站。

在这你可以找到各种智能工具的相关信息,了解智能工具的用法以及最新动态。

一款AIGC提示工具。该工具通过仅几个输入的词语生成详细的图像,简化了创建详细图像的过程。